Hanyue Lou, Jinxiu Liang, Minggui Teng, Bin Fan, Yong Xu, and Boxin Shi

In Advances in Neural Information Processing Systems, 2024

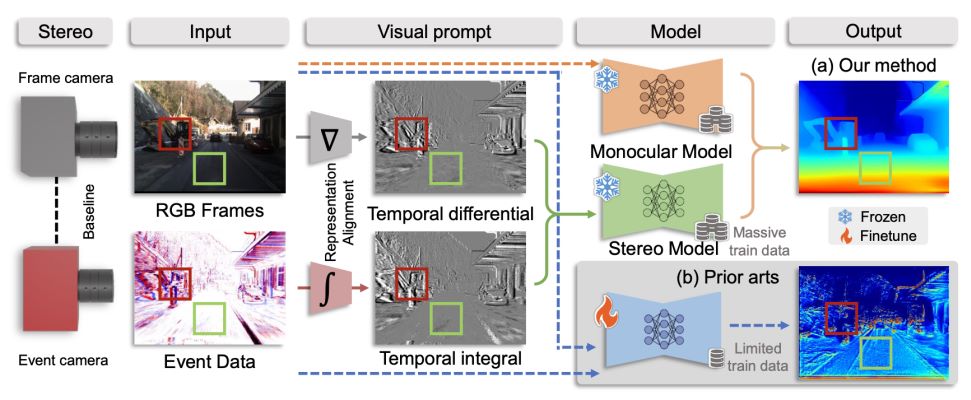

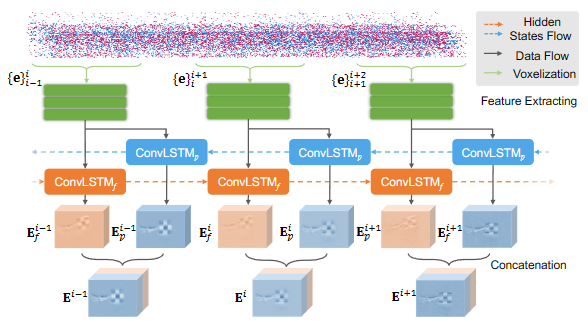

Event-intensity asymmetric stereo systems have emerged as a promising approach for robust 3D perception in dynamic and challenging environments by integrating event cameras with frame-based sensors in different views. However, existing methods often suffer from overfitting and poor generalization due to limited dataset sizes and lack of scene diversity in the event domain. To address these issues, we propose a zero-shot framework that utilizes monocular depth estimation and stereo matching models pretrained on diverse image datasets. Our approach introduces a visual prompting technique to align the representations of frames and events, allowing the use of off-the-shelf stereo models without additional training. Furthermore, we introduce a monocular cue-guided disparity refinement module to improve robustness across static and dynamic regions by incorporating monocular depth information from foundation models. Extensive experiments on real-world datasets demonstrate the superior zero-shot evaluation performance and enhanced generalization ability of our method compared to existing approaches.
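As a rough illustration of how monocular depth cues can support a stereo disparity map, the sketch below fits a scale and shift that map a relative monocular prediction onto the stereo disparity, then uses the aligned prediction where the stereo estimate is flagged unreliable. This is a generic least-squares alignment, not the refinement module proposed in the paper; the function names, the `valid` mask, and the assumption that the monocular model outputs inverse depth are all hypothetical.

```python
import numpy as np


def align_mono_to_disparity(mono_inv_depth, stereo_disp, valid):
    """Fit scale s and shift t so that s * mono + t approximates stereo_disp
    on the pixels marked valid, then apply the fit to the whole map."""
    x = mono_inv_depth[valid].ravel()
    y = stereo_disp[valid].ravel()
    # Design matrix for the linear model y = s * x + t.
    A = np.stack([x, np.ones_like(x)], axis=1)
    (s, t), *_ = np.linalg.lstsq(A, y, rcond=None)
    return s * mono_inv_depth + t


def fill_unreliable(stereo_disp, mono_inv_depth, valid):
    """Keep stereo disparity where it is trusted (assumed mask `valid`),
    and fall back to the aligned monocular prediction elsewhere."""
    aligned = align_mono_to_disparity(mono_inv_depth, stereo_disp, valid)
    return np.where(valid, stereo_disp, aligned)
```

A global scale-and-shift fit is the simplest choice here; it assumes the monocular prediction is affinely related to true disparity across the whole image, which may not hold in practice.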

In Proc. of Conference on Computer Vision and Pattern Recognition, 2023

In Proc. of European Conference on Computer Vision, 2022